2025.3.8: 🔥🔥🔥 AppAgentX, the next generation of AppAgent, is released!

2024.2.8: Added qwen-vl-max (通义千问-VL, Tongyi Qianwen-VL) as an alternative multimodal model. The model is currently free to use!

2024: The evaluation benchmark used in AppAgent is released on GitHub.

2024.1.2: 🔥 Added an optional grid overlay that the agent can bring up to tap or swipe anywhere on the screen.

2023.12.26: AppAgent now supports the Android emulator! Try it even if you don't have an Android device.

2023.12.21: 🔥🔥 Open-sourced the Git repository, including detailed configuration steps for setting up AppAgent!
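The grid-overlay option in the news entries above lets the agent target points that have no labeled UI element. One way to picture it is a function that maps a numbered grid cell to the pixel at that cell's center. This is a minimal sketch assuming a row-major grid of square cells numbered from 1; the cell size, numbering, and function names are illustrative assumptions, not AppAgent's exact scheme.

```python
from typing import Tuple


def grid_dims(width: int, height: int, cell: int) -> Tuple[int, int]:
    """Return (rows, cols) for a screen divided into square cells of side `cell` px.

    Hypothetical helper: the square-cell layout is an assumption for illustration.
    """
    # Ceiling division so partial cells along the right/bottom edges stay addressable.
    cols = -(-width // cell)
    rows = -(-height // cell)
    return rows, cols


def cell_center(index: int, width: int, height: int, cell: int) -> Tuple[int, int]:
    """Map a 1-based, row-major cell index to the pixel center of that cell."""
    rows, cols = grid_dims(width, height, cell)
    if not 1 <= index <= rows * cols:
        raise ValueError(f"cell {index} outside 1..{rows * cols}")
    r, c = divmod(index - 1, cols)
    x0, y0 = c * cell, r * cell
    # Clip partial edge cells to the screen so the center always lands on screen.
    x1, y1 = min(x0 + cell, width), min(y0 + cell, height)
    return (x0 + x1) // 2, (y0 + y1) // 2
```

For a 1080x2400 screen with 120 px cells, `cell_center(10, 1080, 2400, 120)` returns `(60, 180)`, the first cell of the second row. The resulting point can be fed to a tap, or two such points to a swipe.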

Recent advancements in large language models (LLMs) have led to intelligent agents capable of performing complex tasks. This paper introduces a novel LLM-based multimodal agent framework for operating smartphone applications. The framework lets the agent operate apps through a simplified action space that mimics human interactions such as tapping and swiping. Because this approach does not require system back-end access, it applies across a wide range of apps. Central to the agent's functionality is its learning method: the agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent consults when executing complex tasks across different applications. To demonstrate the agent's practicality, we conducted extensive testing on 50 tasks across 10 different applications, including social media, email, maps, shopping, and sophisticated image editing tools. The results affirm the agent's proficiency in handling a diverse array of high-level tasks.
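To make the simplified action space concrete, here is a minimal sketch that translates a few human-like actions into Android's stock `adb shell input` commands, which operate on the screen and need no app back-end access. The action classes and `to_adb` are hypothetical names for illustration, not AppAgent's actual API, and the sketch assumes the caller already has absolute pixel coordinates.

```python
from dataclasses import dataclass
from typing import List, Union


# Hypothetical action types; not AppAgent's real interface.
@dataclass
class Tap:
    x: int
    y: int


@dataclass
class LongPress:
    x: int
    y: int
    duration_ms: int = 1000


@dataclass
class Swipe:
    x1: int
    y1: int
    x2: int
    y2: int
    duration_ms: int = 400


@dataclass
class Text:
    value: str


@dataclass
class Back:
    pass


Action = Union[Tap, LongPress, Swipe, Text, Back]


def to_adb(action: Action) -> List[str]:
    """Translate an abstract UI action into an `adb shell input` argv list."""
    base = ["adb", "shell", "input"]
    if isinstance(action, Tap):
        return base + ["tap", str(action.x), str(action.y)]
    if isinstance(action, LongPress):
        # A swipe that starts and ends at the same point behaves as a long press.
        return base + ["swipe", str(action.x), str(action.y),
                       str(action.x), str(action.y), str(action.duration_ms)]
    if isinstance(action, Swipe):
        return base + ["swipe", str(action.x1), str(action.y1),
                       str(action.x2), str(action.y2), str(action.duration_ms)]
    if isinstance(action, Text):
        # `input text` splits on spaces; "%s" encodes a space. Other shell
        # metacharacters are not escaped in this sketch.
        return base + ["text", action.value.replace(" ", "%s")]
    if isinstance(action, Back):
        return base + ["keyevent", "KEYCODE_BACK"]
    raise TypeError(f"unsupported action: {action!r}")
```

With a device or emulator connected, `subprocess.run(to_adb(Tap(540, 1200)), check=True)` would issue the tap. Emulating a long press as a zero-length swipe keeps every gesture within the stock `input` tool.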

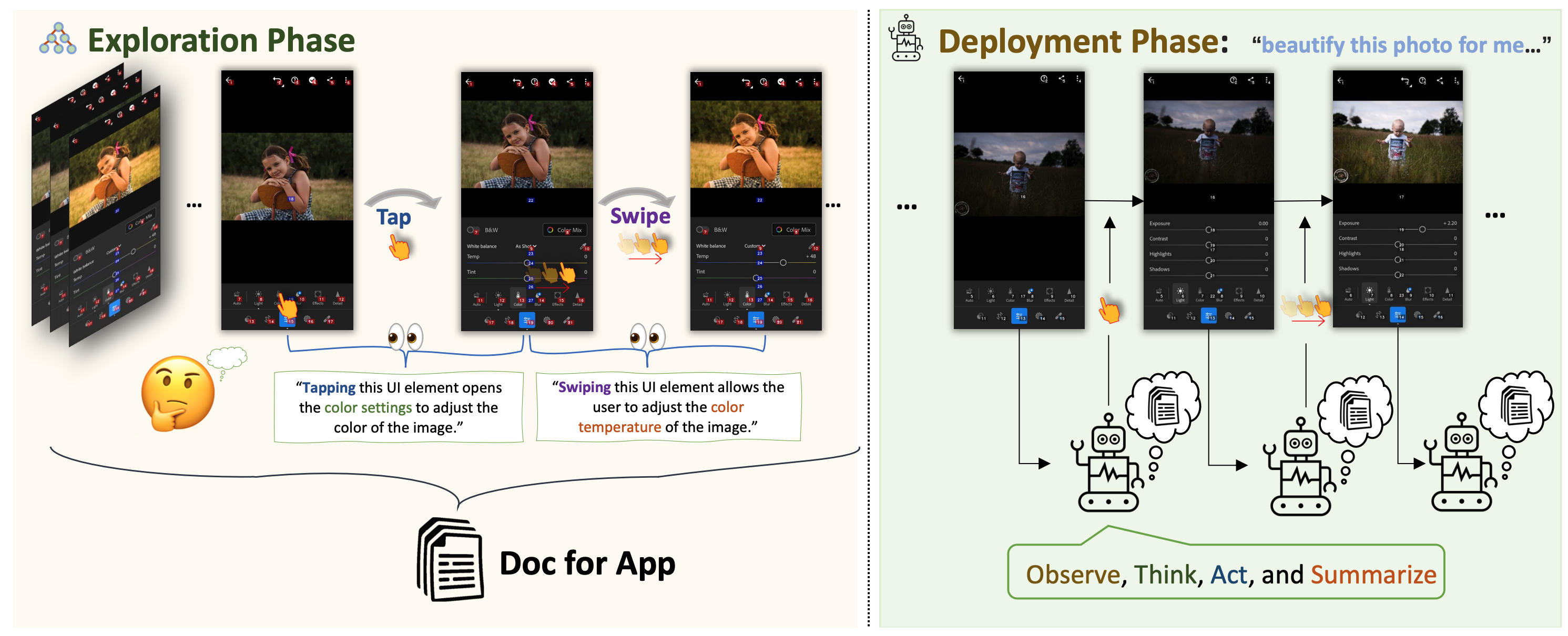

AppAgent operates in two phases: an exploration phase and a deployment phase. In the exploration phase, AppAgent observes interactions with the user interfaces of different apps. Through sufficient observation, it becomes adept at using an app, and this knowledge is compiled into a document. Once this learning phase is complete, the agent is ready for action. In the deployment phase, AppAgent can handle high-level tasks in any supported application. This two-phase approach enables AppAgent to efficiently complete a variety of complex tasks across different applications.
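The two-phase workflow above can be sketched as a per-app knowledge document that exploration writes and deployment reads. This is a minimal illustration under assumed names (`KnowledgeBase`, `record`, `lookup`, `build_prompt`), with UI elements identified by hypothetical string ids; AppAgent's real documents and prompts are richer than this.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KnowledgeBase:
    """Hypothetical per-app notes: app -> {element id -> what the element does}."""
    docs: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def record(self, app: str, element_id: str, effect: str) -> None:
        # Exploration phase: store (or overwrite) what interacting with an element did.
        self.docs.setdefault(app, {})[element_id] = effect

    def lookup(self, app: str, element_ids: List[str]) -> Dict[str, str]:
        # Deployment phase: fetch notes only for elements visible on the current screen.
        notes = self.docs.get(app, {})
        return {e: notes[e] for e in element_ids if e in notes}


def build_prompt(task: str, app: str, visible: List[str], kb: KnowledgeBase) -> str:
    """Assemble a task prompt that includes documentation learned during exploration."""
    lines = [f"Task: {task}", f"App: {app}", "Known elements:"]
    for element_id, effect in kb.lookup(app, visible).items():
        lines.append(f"- {element_id}: {effect}")
    return "\n".join(lines)
```

Restricting `lookup` to elements visible on the current screen is one way to keep the prompt short as the document grows with each explored app.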

Demos of AppAgent exploring and deploying on Gmail and X, two of the most commonly used daily apps.

@misc{yang2023appagent,
  title={AppAgent: Multimodal Agents as Smartphone Users},
  author={Chi Zhang and Zhao Yang and Jiaxuan Liu and Yucheng Han and Xin Chen and Zebiao Huang and Bin Fu and Gang Yu},
  year={2023},
  eprint={2312.13771},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}